Virtual production Innovation series #2: Tracking

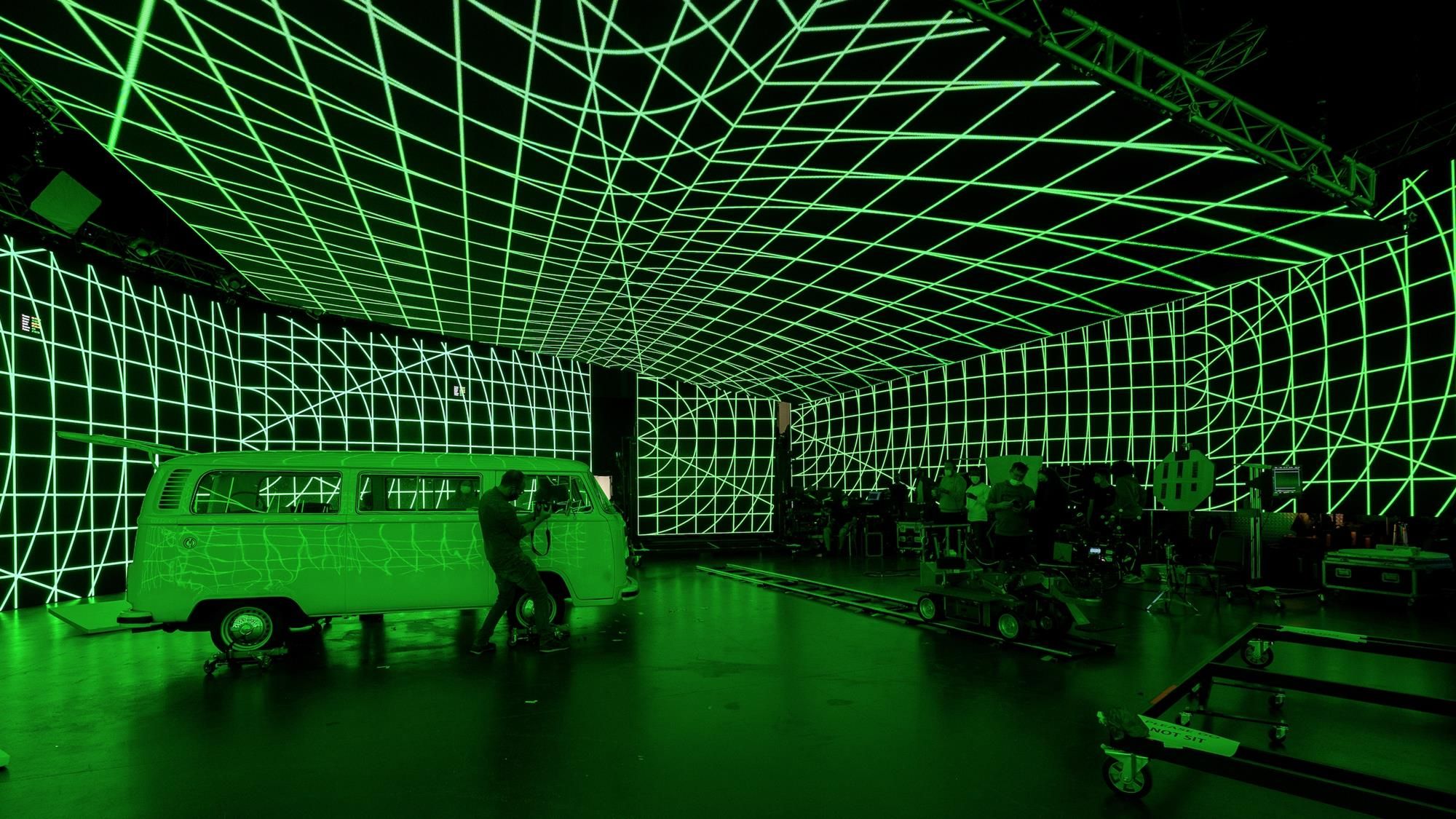

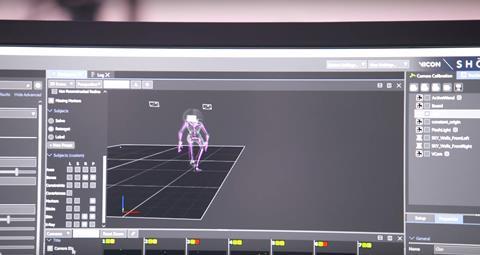

In the second part of the Virtual Production Innovation series, Dimension Studios explains how the team tested tracking an actor in a mo-cap suit alongside tracking a physical prop.

The project was directed by Paul Franklin, creative director at DNEG. He said: 'We put motion capture markers onto a flashlight prop that one of the cast members was holding and then used that input to drive a virtual patch of illumination on the screen: a pool of light appeared wherever the actor pointed the flashlight. This gave us a degree of spontaneous interaction with the virtual environment that produced a great live feeling in the LED image.'

Franklin continues: 'We also had another performer don a motion capture suit and used their captured performance to drive the real-time movement of a character displayed on the screen. Our live-action actor (still wielding the flashlight) was then able to interact with the character on the screen in real time, which made for a very connected dramatic moment. Latency was noticeable at first, but once we'd gotten used to it we didn't find it a problem at all.'

VIRTUAL PRODUCTION INNOVATION SERIES: TRACKING

Broadcast teamed up with virtual production and volumetric capture specialist Dimension to present this six-part series of short films providing insight into shooting on LED volume stages.

The series is focused on the 'Virtual Production Innovation Project', which was designed to explore the state of the art in virtual production, testing different workflows and scenarios.

The project was conceived and produced by Dimension, DNEG, and Sky Studios, and filmed at Arri's Uxbridge studio with support from partners Creative Technology and NEP Group. The aim is to share insights and expertise with the creative community, while helping upskill the next generation of creative talent.

The second episode in the series is below:

Featured in the video:

Tim Doubleday, virtual production supervisor, Dimension

The series of six films explaining different learnings from the project will be released weekly throughout March and April, exclusively on Broadcastnow.

Each episode discusses the tests the team has undertaken and demonstrates some of the advantages of virtual production in capturing in-camera VFX and final pixel shots using an LED volume.

Alongside the film, we present an exclusive interview with one of the key members of the production team, talking about the insights offered in that week's episode and the lessons they learned during the making of the Virtual Production Innovation Project films.

The series will cover lighting, tracking, simulcam, in-camera effects, scanning, and 360 photospheres. Broadcast's interview with Dimension virtual production supervisor Tim Doubleday about tracking is below.

Broadcast interview with Dimension virtual production supervisor Tim Doubleday

Are there additional considerations when mo-cap tracking in front of an LED volume wall compared to a traditional production environment?

When running full-body motion capture in an LED wall environment, it's important to consider the latency between the actor's motion and when it appears on the wall. There is an inherent latency between rendering the images and putting them on the wall, so as long as you are within this time it should look OK, although there will still be a short delay between the actor and the character on screen. For example, consider a use case where the physical actor high-fives a CG character on the wall: if there is too much latency, the CG character won't react in time and it will look off.
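As a rough illustration of the latency comparison described above, the sketch below adds up per-stage delays in frames and checks whether the tracking path fits within the wall's own render-to-display delay. The stage names, frame counts and frame rate are hypothetical placeholders, not figures from the shoot.

```python
from dataclasses import dataclass


@dataclass
class Stage:
    name: str
    frames: float  # delay this stage adds, measured in frames


def total_latency_ms(stages, fps):
    """Sum the per-stage delays and convert from frames to milliseconds."""
    return sum(stage.frames for stage in stages) * 1000.0 / fps


def fits_wall_latency(tracking_stages, wall_stages, fps):
    """True if tracked motion arrives within the wall's render-to-display delay."""
    return total_latency_ms(tracking_stages, fps) <= total_latency_ms(wall_stages, fps)


# Illustrative numbers only, not measurements from the shoot.
wall = [Stage("render", 2), Stage("LED processing", 1)]
tracking = [Stage("mo-cap solve", 1), Stage("network", 0.5)]
print(total_latency_ms(wall, fps=24))         # 125.0
print(fits_wall_latency(tracking, wall, 24))  # True
```

Expressing each stage in frames keeps the comparison tied to the shooting frame rate, which is the unit a stage team would usually reason in.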

The same thing applies to any props you are tracking. For the torch example, we had to make sure there was minimal delay between the actor moving the torch in the physical space and the CG torch moving and applying light onto the wall. The benefit of using the Vicon system to track the camera, props and full-body subjects is that everything is delivered with the lowest possible latency, using the same sync and refresh update as the wall.
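One way to picture the torch effect is as a ray cast from the tracked prop onto the wall. The sketch below is a simplified assumption, not the production setup: it treats the wall as a flat plane (real LED volumes are often curved) and uses a hypothetical function name and coordinate layout to find where the pool of light would land.

```python
def dot(a, b):
    """Dot product of two 3D vectors given as tuples."""
    return sum(x * y for x, y in zip(a, b))


def light_pool_on_wall(origin, direction, wall_point, wall_normal):
    """Return the point where the torch ray meets the wall plane, or None.

    origin, direction: tracked torch position and pointing direction.
    wall_point, wall_normal: any point on the (assumed flat) wall and its normal.
    """
    denom = dot(direction, wall_normal)
    if abs(denom) < 1e-9:
        return None  # torch is pointing parallel to the wall
    offset = tuple(w - o for w, o in zip(wall_point, origin))
    t = dot(offset, wall_normal) / denom
    if t < 0:
        return None  # torch is pointing away from the wall
    return tuple(o + t * d for o, d in zip(origin, direction))


# Torch held 1.5 m up, pointing straight at a wall 4 m away along +z.
hit = light_pool_on_wall((0, 1.5, 0), (0, 0, 1), (0, 0, 4), (0, 0, -1))
print(hit)  # (0.0, 1.5, 4.0)
```

In a live setup this calculation would run every frame on the latest tracked pose, so any tracking delay shows up directly as the pool of light lagging behind the prop.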

An obvious consideration, but one worth highlighting, is to make sure you use a tracking system that doesn't interfere with visible light or lighting that could affect the look in the camera. For this test, we used passive markers that reflect the IR light, but for other shows we use active markers with built-in IR LEDs, which are invisible to the film camera and also allow the tracking cameras to run with the strobes off.

Where you place the mo-cap performer is also important. For the Sky shoot, the mo-cap actor was in the same space as the wall and the physical actor. This meant they could play off each other and the mo-cap actor could see themselves on the screen. This could be distracting, though, so you might consider having a completely separate full-body volume so the actor can focus on their performance and the LED volume doesn't have an actor getting in the way. It's also worth thinking about eyelines and the perspective difference between the physical and virtual characters. Even using the tried and tested tennis ball on a stick to show where the mo-cap actor should be directing their performance could help sell the shot.

What type of productions/applications do you imagine could make good use of mo-cap driven real-time animated CG characters in virtual production?

The key benefit of having a live full-body character is that it allows the scene to be dynamic and for the physical actor to react to the CG character in real time. Subtle movements like eye darts and head turns might influence how the actors react to the scene around them. It's hard to deliver final-quality CG characters in real time, but by recording both the camera motion and the CG character's motion it's possible to do a traditional VFX pass in post to deliver the higher-quality render required for high-end film and TV. I think creature work is a natural fit, but it can even be something more abstract. You might have the mo-cap actor puppeteering a particle system that rains down effects onto the physical actor via the wall.

Another use case is where you want your actor to have influence over the CG environment that is displayed on the wall. For example, you might want the actor to wave their hand and have the environment respond, say by changing a virtual light or moving tree foliage. By tracking the actor, all these things are possible and allow for heightened immersion between the actor's performance and what is viewed on the wall.

I think there is a real opportunity to mix motion capture with live performance, whether it be on an LED wall or for augmented reality experiences. Being able to track physical objects along with the actors opens up a world of possibilities to create stunning interactive experiences that make use of all these incredible virtual production techniques.
